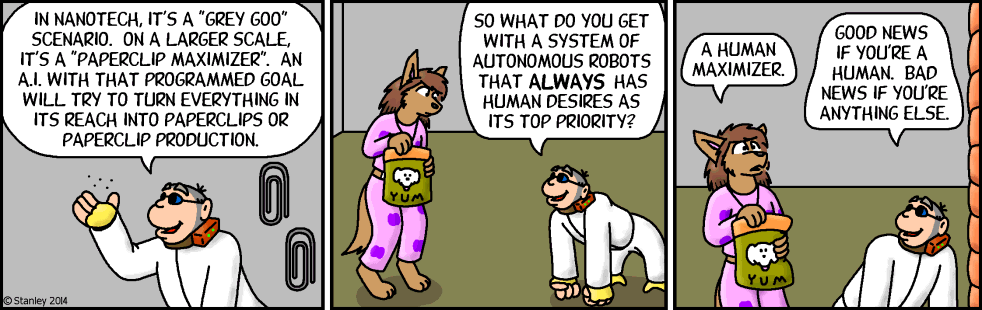

AI and the paperclip problem

Philosophers have speculated that an AI given a task such as making paperclips might cause an apocalypse by learning to divert ever-increasing resources to that task, and then learning to resist our attempts to turn it off. But this column argues that, to do this, the paperclip-making AI would need to create another AI that could acquire power both over humans and over itself, and so it would self-regulate to prevent this outcome. Humans who create AIs with the goal of acquiring power may be a greater existential threat.

The Paperclip Maximiser Theory: A Cautionary Tale for the Future

AI's Deadly Paperclips

Are You a Divergent Thinker? Take This Simple Paper Clip Test to Find Out

Chris Albon (@chrisalbon) on Threads

Squiggle Maximizer (formerly Paperclip maximizer) - LessWrong

Stuart Armstrong on LinkedIn: At one of the oldest debate

EN / Freefall 2537

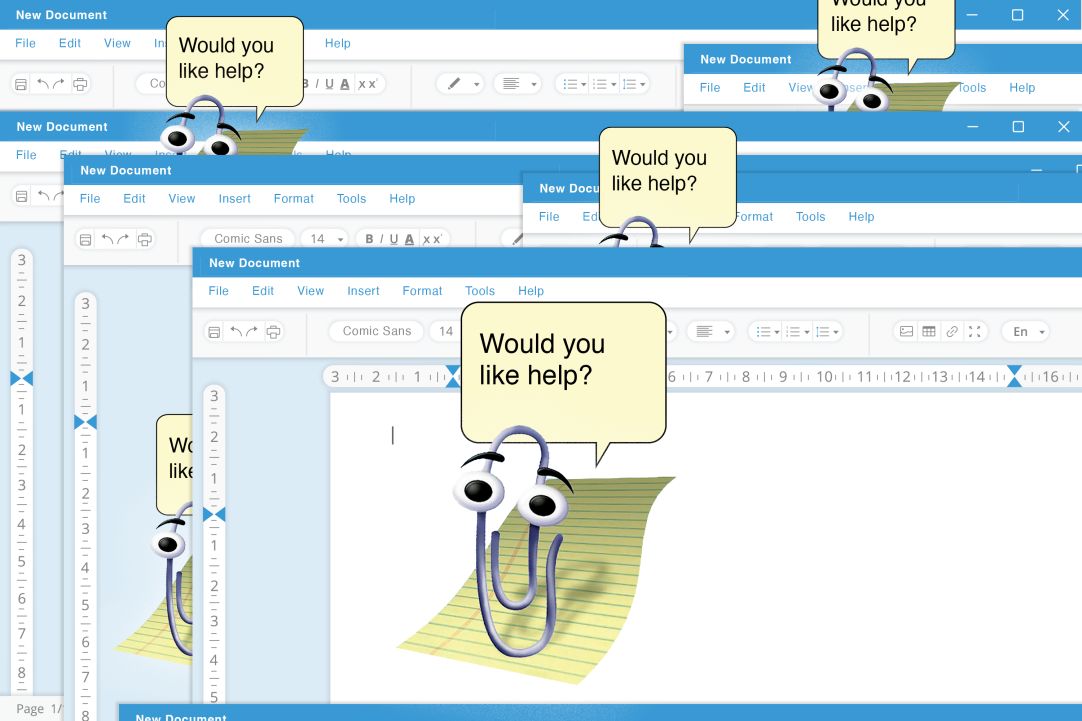

The Twisted Life of Clippy

Bing! search, the downfall of humanity

Watson - What the Daily WTF?

Making Ethical AI and Avoiding the Paperclip Maximizer Problem

A sufficiently paranoid paperclip maximizer — LessWrong

How An AI Asked To Produce Paperclips Could End Up Wiping Out

Exploring the 'Paperclip Maximizer': A Test of AI Ethics with ChatGPT