Drones, Free Full-Text

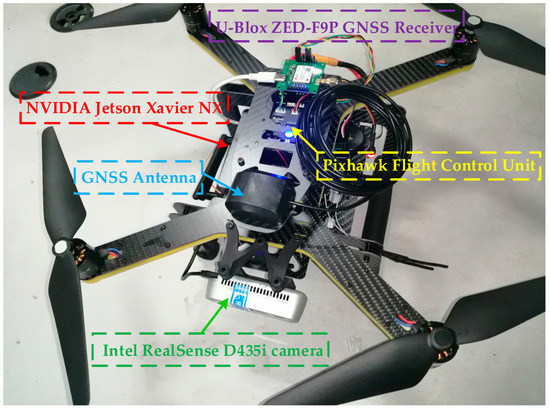

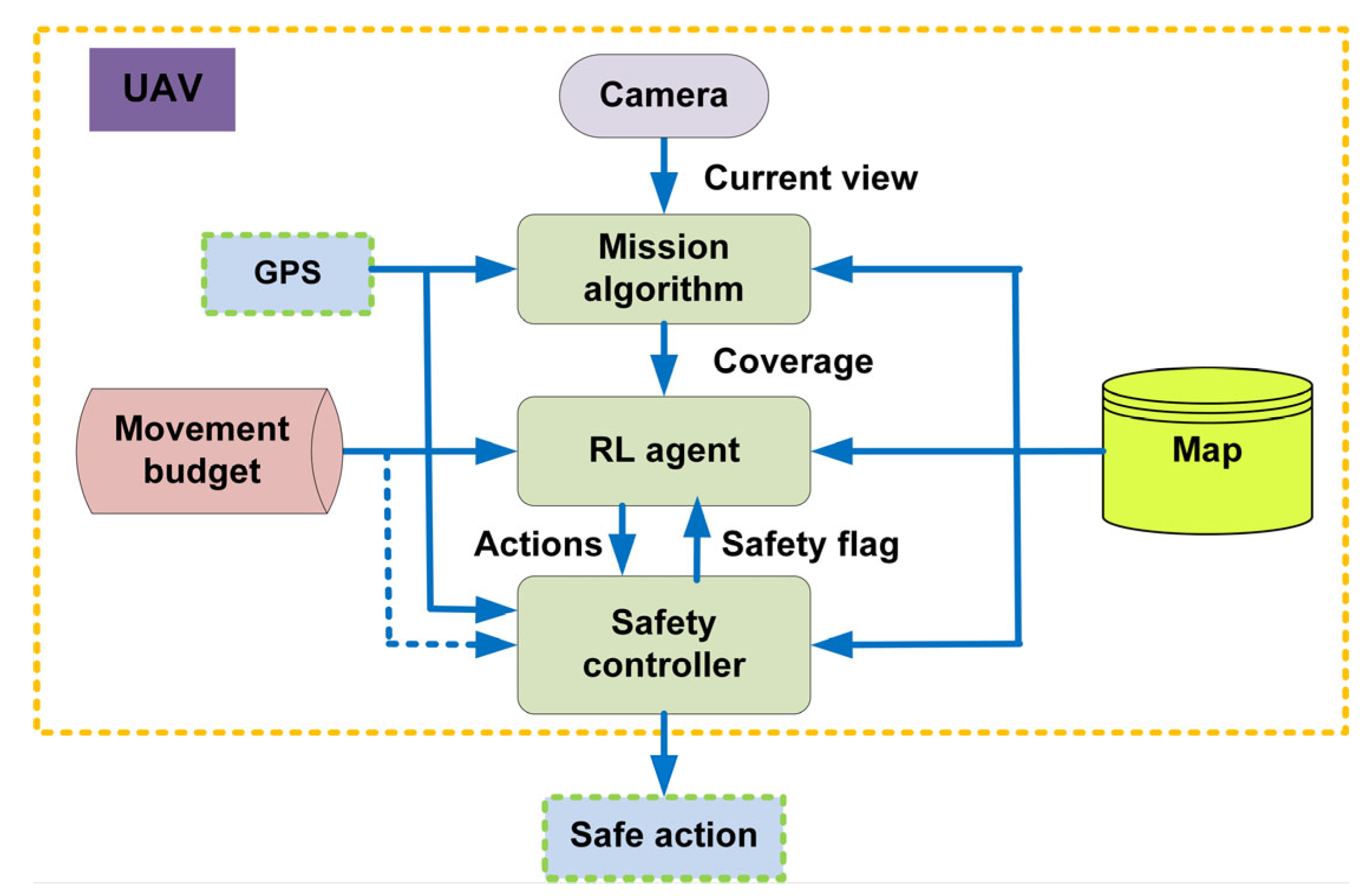

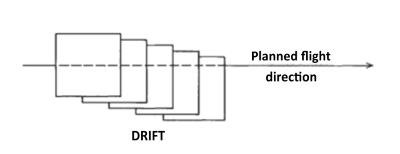

Self-localization and state estimation are crucial capabilities for agile autonomous drone navigation. This article presents a lightweight and drift-free vision-IMU-GNSS tightly coupled multisensor fusion (LDMF) strategy for safe autonomous drone navigation. The drone is equipped with a front-facing camera that provides visual geometric constraints and is used to build a 3D map of the environment. In addition, a multi-constellation GNSS receiver continuously supplies pseudorange, Doppler shift, and UTC time-pulse signals to the drone's navigation system. The proposed fusion strategy uses the Kanade–Lucas algorithm to track multiple visual features in each input image. The local graph solution is bounded within a sliding window, which greatly reduces the computational complexity of the factor graph optimization. The navigation system achieves camera-rate performance on a small companion computer. We evaluated the LDMF system extensively in both simulated and real-world environments, and the results demonstrate substantial advantages over state-of-the-art sensor fusion strategies.
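The abstract names pseudorange measurements but does not give their model. As a minimal sketch, assuming the textbook simplified model rho_i = ||s_i - p|| + c·dt (no atmospheric delays, satellite clock errors, or Earth-rotation correction), the residual below is the kind of per-satellite term a tightly coupled pseudorange factor would contribute. Here it is solved for a single epoch with plain Gauss-Newton rather than inside the sliding-window factor graph LDMF uses; the function names, satellite geometry, and clock offset are hypothetical.

```python
import numpy as np

C = 299_792_458.0  # speed of light in m/s


def pseudorange_residuals(x, sat_pos, rho):
    """Measured minus predicted pseudoranges for state x = [px, py, pz, c*dt].

    Hypothetical simplified model: rho_i = ||s_i - p|| + c*dt, with no
    atmospheric delay, satellite clock error, or Earth-rotation correction.
    """
    p, cdt = x[:3], x[3]
    ranges = np.linalg.norm(sat_pos - p, axis=1)
    return rho - (ranges + cdt)


def solve_position(sat_pos, rho, x0=None, iters=20, tol=1e-6):
    """Single-epoch Gauss-Newton estimate of receiver position and c*dt (metres)."""
    x = np.zeros(4) if x0 is None else np.asarray(x0, dtype=float).copy()
    for _ in range(iters):
        diff = sat_pos - x[:3]
        ranges = np.linalg.norm(diff, axis=1)
        # Jacobian of the predicted pseudorange w.r.t. [p, c*dt]:
        # d||s - p||/dp = -(s - p)/||s - p||, and the clock column is all ones.
        J = np.hstack([-diff / ranges[:, None], np.ones((len(rho), 1))])
        r = pseudorange_residuals(x, sat_pos, rho)
        # Linearised step: solve J dx = r in the least-squares sense.
        dx, *_ = np.linalg.lstsq(J, r, rcond=None)
        x = x + dx
        if np.linalg.norm(dx) < tol:
            break
    return x


# Synthetic, noise-free example: five satellites and a receiver on the x-axis.
sats = np.array([
    [2.6e7, 0.0, 0.0],
    [2.0e7, 1.5e7, 0.0],
    [2.0e7, -1.5e7, 0.0],
    [2.0e7, 0.0, 1.5e7],
    [2.0e7, 0.0, -1.5e7],
])
truth = np.array([6.371e6, 0.0, 0.0])
clock_bias_s = 1e-5  # hypothetical receiver clock offset in seconds
rho = np.linalg.norm(sats - truth, axis=1) + C * clock_bias_s
est = solve_position(sats, rho)
```

The receiver clock offset is estimated as c·dt in metres so that all four state components share one unit, which keeps the least-squares step well scaled. In a tightly coupled design, each satellite's residual would typically enter the graph as its own factor alongside visual and IMU terms, instead of being collapsed into a position fix first as this standalone sketch does.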
