Learn how to fine-tune Llama 2 with LoRA (Low-Rank Adaptation) for question answering. This guide walks through the prerequisites and environment setup, setting up the model and tokenizer, and configuring quantization.
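The core idea of LoRA can be illustrated without any library. What follows is a minimal plain-Python sketch, not the guide's own code and not the PEFT library's API. It computes the LoRA forward pass h = W0 x + (alpha / r) B A x, where the pretrained weight W0 stays frozen and only the small matrices A (r x d_in) and B (d_out x r) are trained. The alpha / r scaling and the zero initialization of B follow the original LoRA paper. It also counts trainable parameters for a single weight matrix. The function names and toy matrices are hypothetical, chosen for illustration only.

```python
"""Illustrative sketch of the LoRA forward pass and its parameter savings.

Plain Python only; function names are hypothetical and not from any library.
"""

from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(row[j] * v[j] for j in range(len(v))) for row in m]


def lora_forward(w0: Matrix, a: Matrix, b: Matrix, x: Vector,
                 alpha: float, r: int) -> Vector:
    """Compute h = W0 x + (alpha / r) * B (A x).

    w0: frozen pretrained weight, shape (d_out, d_in)
    a:  trainable down-projection, shape (r, d_in)
    b:  trainable up-projection, shape (d_out, r)
    """
    base = matvec(w0, x)                # frozen path through the original weight
    update = matvec(b, matvec(a, x))    # low-rank path: d_in -> r -> d_out
    scale = alpha / r                   # LoRA scaling factor
    return [h + scale * u for h, u in zip(base, update)]


def lora_param_counts(d_in: int, d_out: int, r: int) -> Tuple[int, int]:
    """Return (full fine-tuning params, LoRA trainable params) for one matrix."""
    return d_in * d_out, r * (d_in + d_out)


if __name__ == "__main__":
    w0 = [[1.0, 0.0], [0.0, 1.0]]
    a = [[0.5, 0.5]]      # rank r = 1
    b = [[0.0], [0.0]]    # B starts at zero, so the adapter is initially a no-op
    print(lora_forward(w0, a, b, [2.0, 3.0], alpha=16, r=1))  # [2.0, 3.0]

    # A 4096 x 4096 projection, the size of the attention projections in Llama 2 7B
    full, lora = lora_param_counts(4096, 4096, r=8)
    print(full, lora)  # 16777216 65536
```

With rank 8, the adapter for one 4096 x 4096 matrix trains 65,536 parameters instead of 16,777,216, about 256 times fewer. Because B starts at zero, training begins from exactly the pretrained model's behavior.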

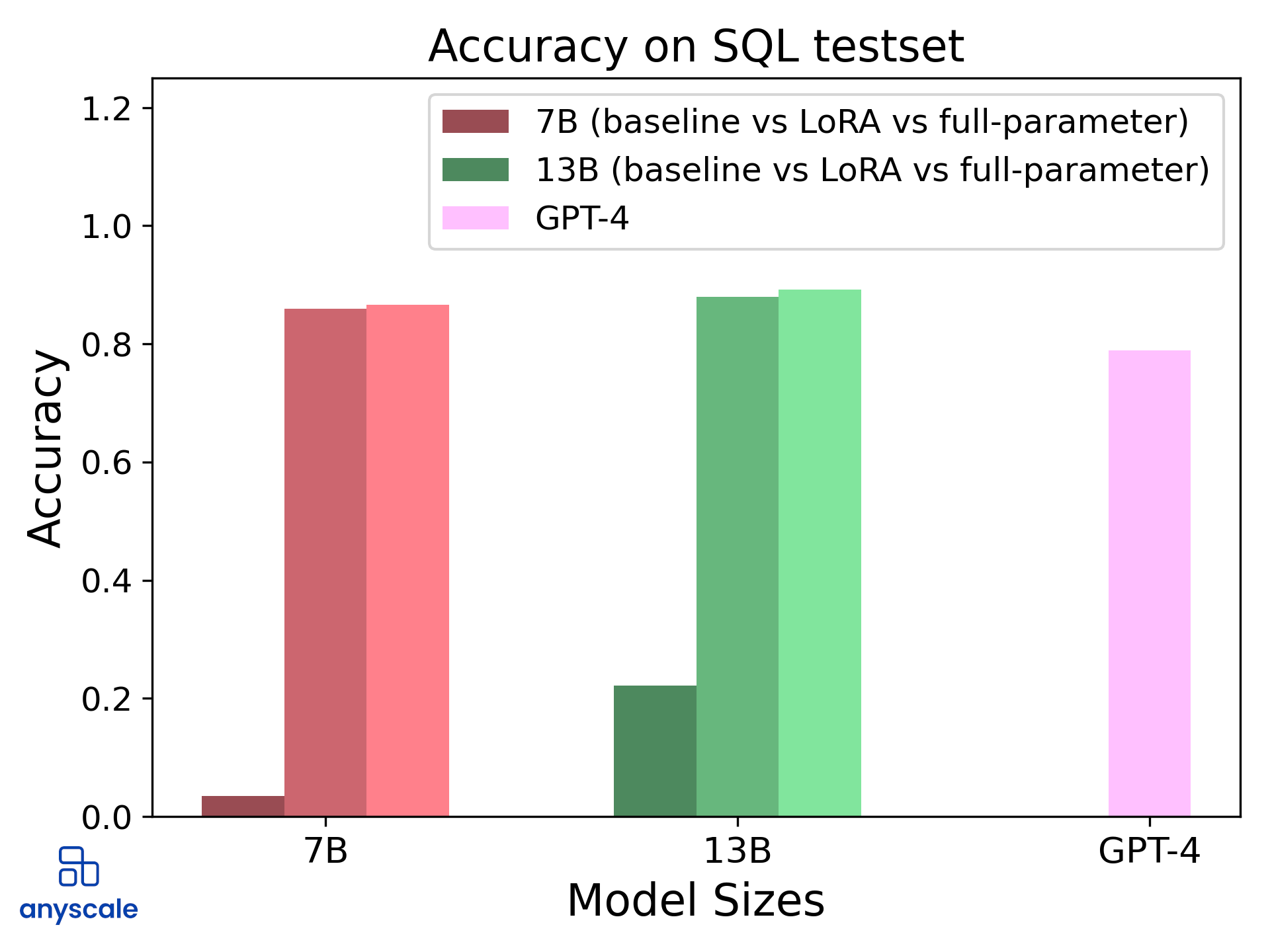

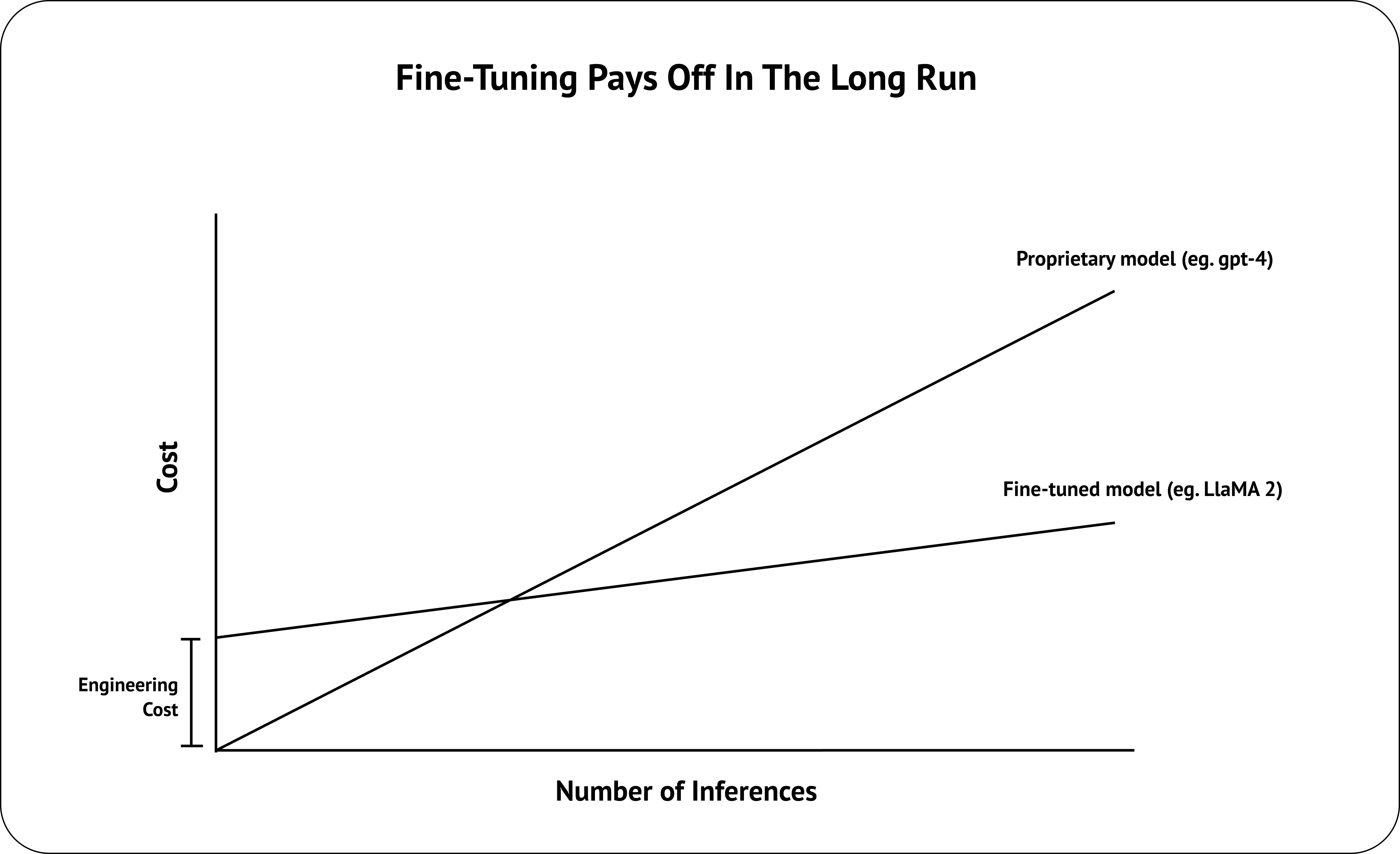

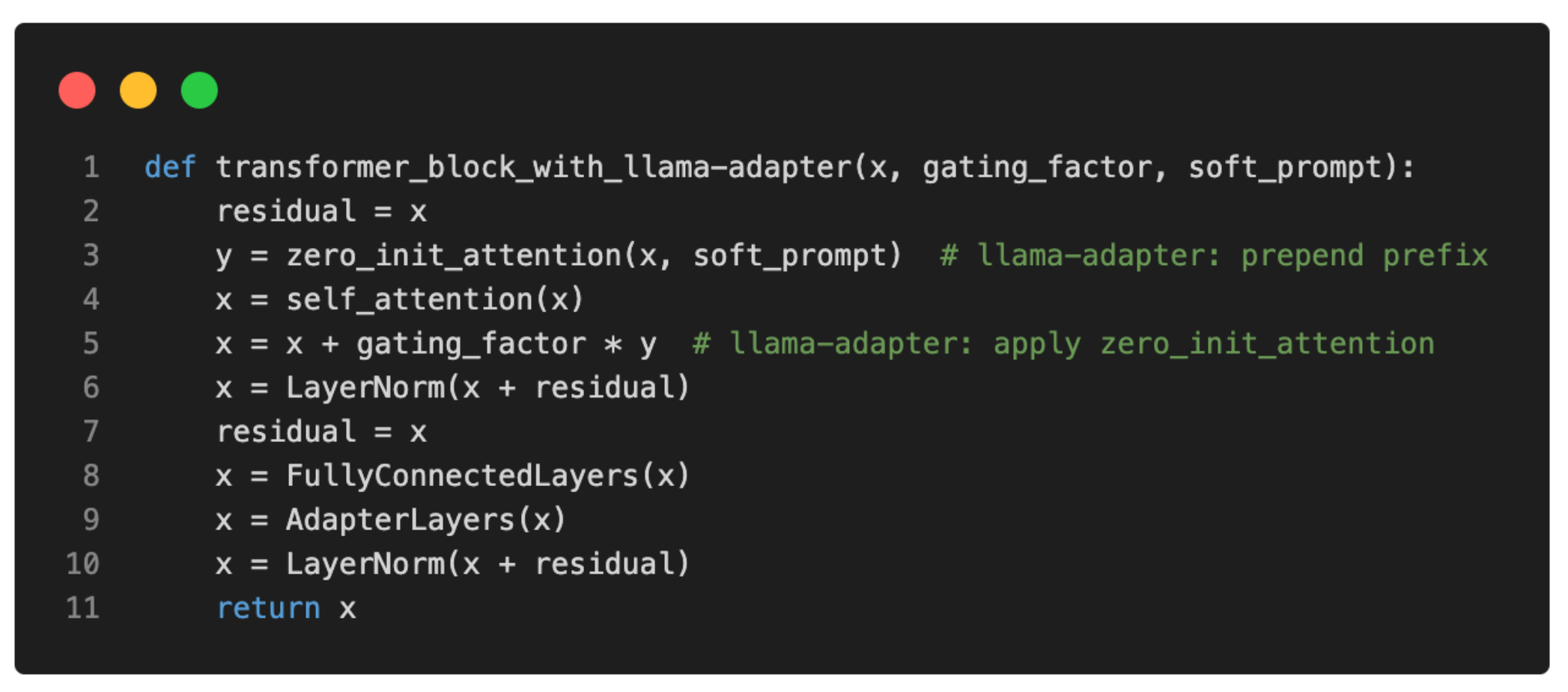

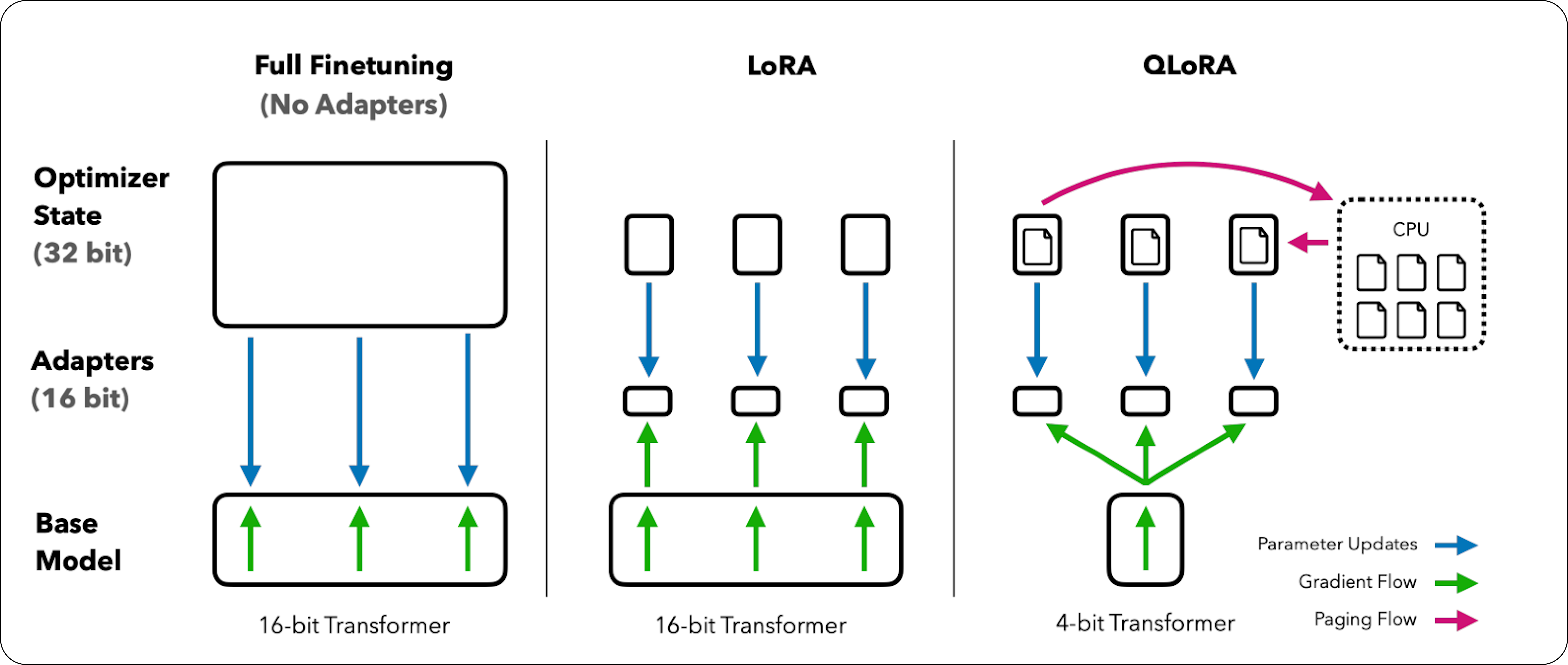

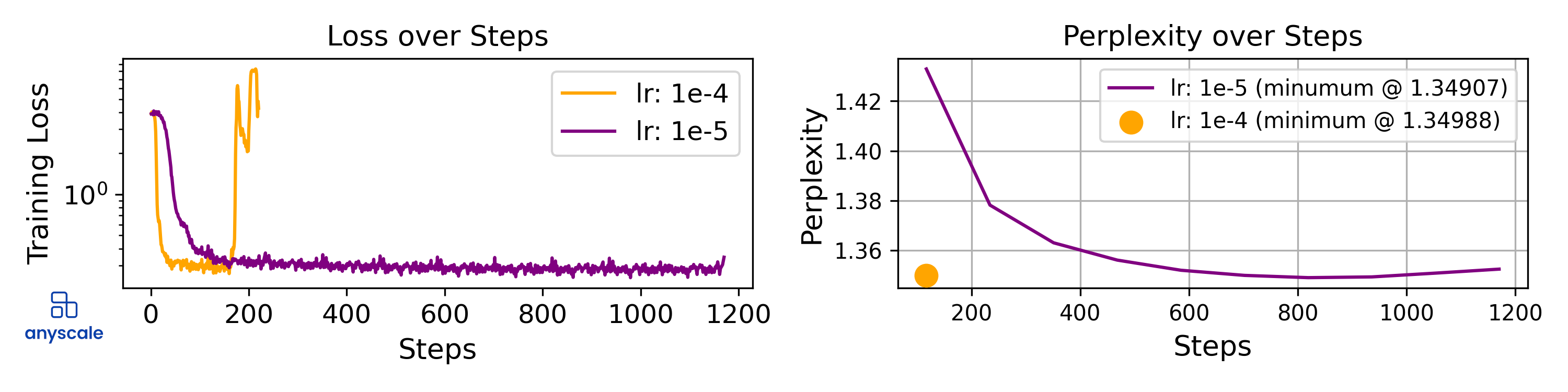

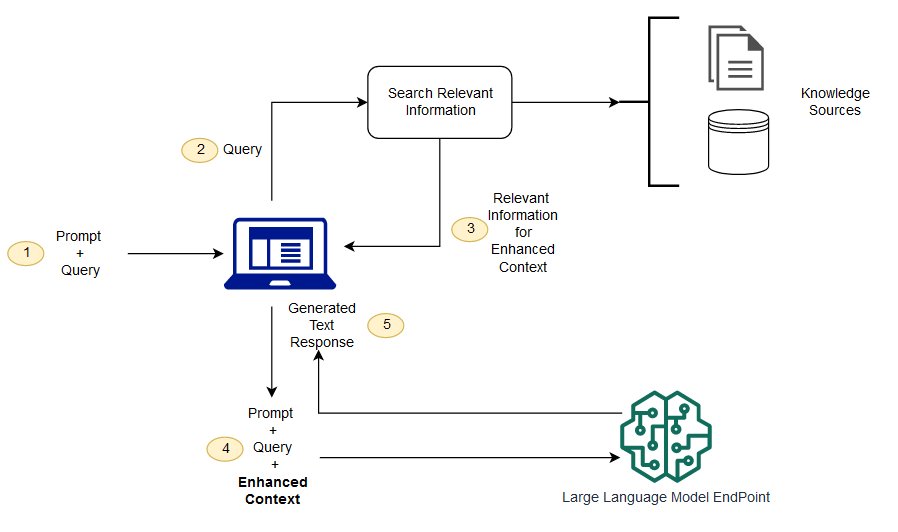
