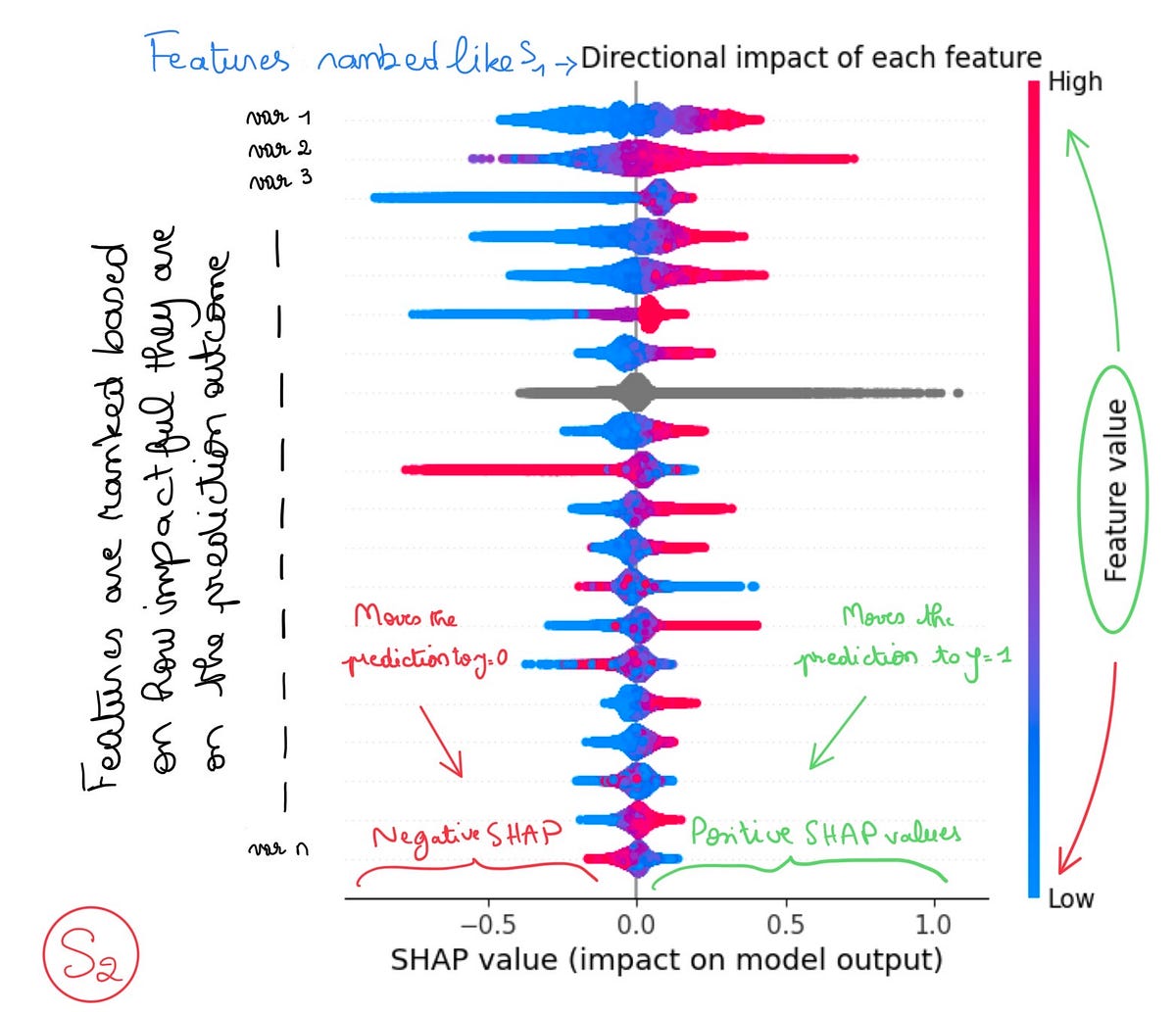

An Introduction to SHAP Values and Machine Learning Interpretability

Relation between prognostics predictor evaluation metrics and local interpretability SHAP values - ScienceDirect

Supervised Clustering: Cluster Analysis Using SHAP Values

Interpretation of machine learning models using shapley values: application to compound potency and multi-target activity predictions

Machine Learning Tutorials, Read The Latest About ML & AI

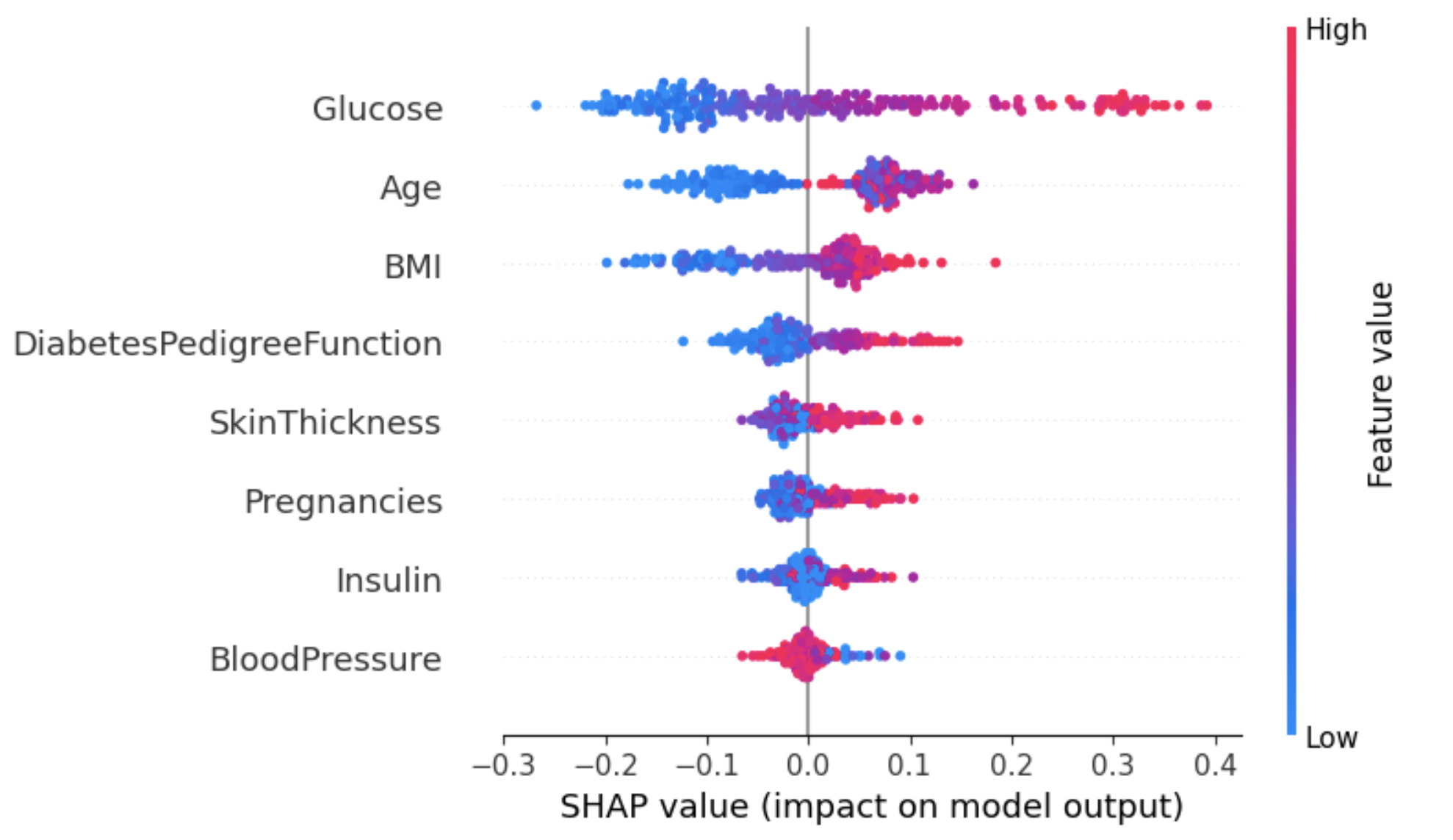

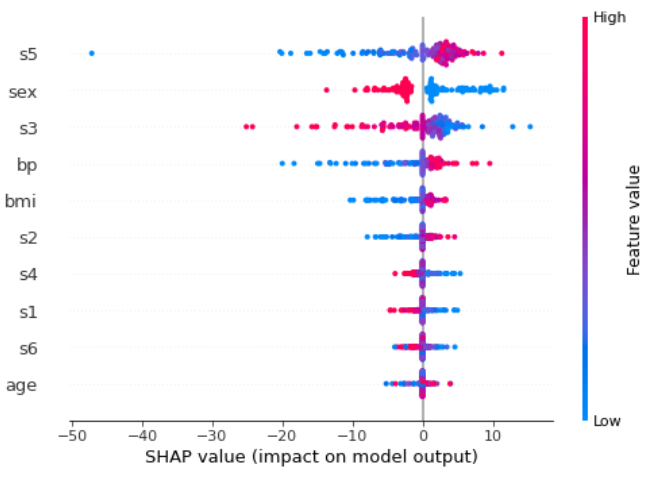

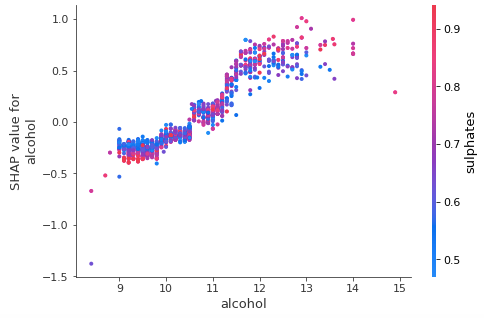

How to explain your machine learning model using SHAP? by Dan Lantos, Advancing Analytics

6 – Interpretability – Machine Learning Blog, ML@CMU

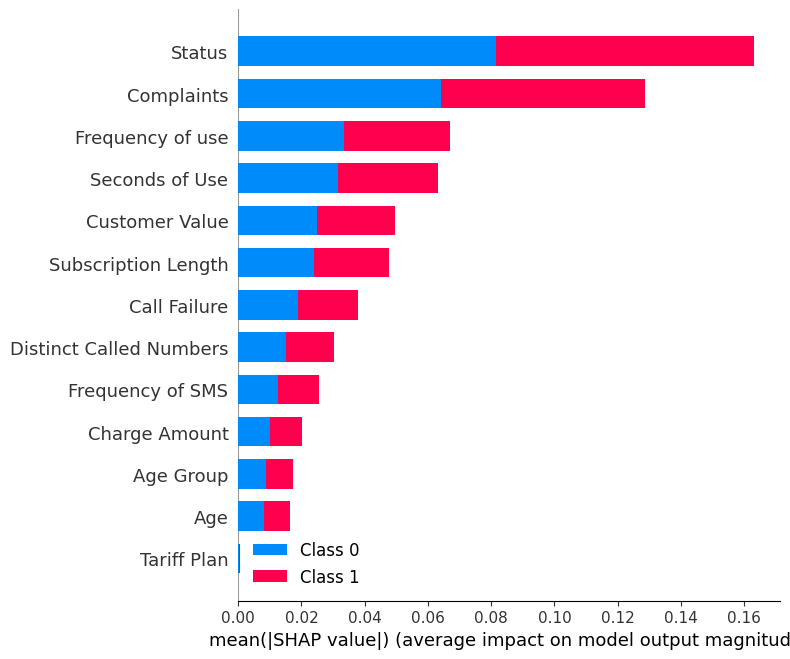

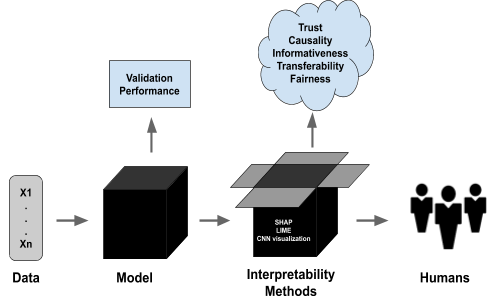

Explainable AI, LIME & SHAP for Model Interpretability, Unlocking AI's Decision-Making

How to explain neural networks using SHAP

Explain Your Model with the SHAP Values, by Chris Kuo/Dr. Dataman, Dataman in AI

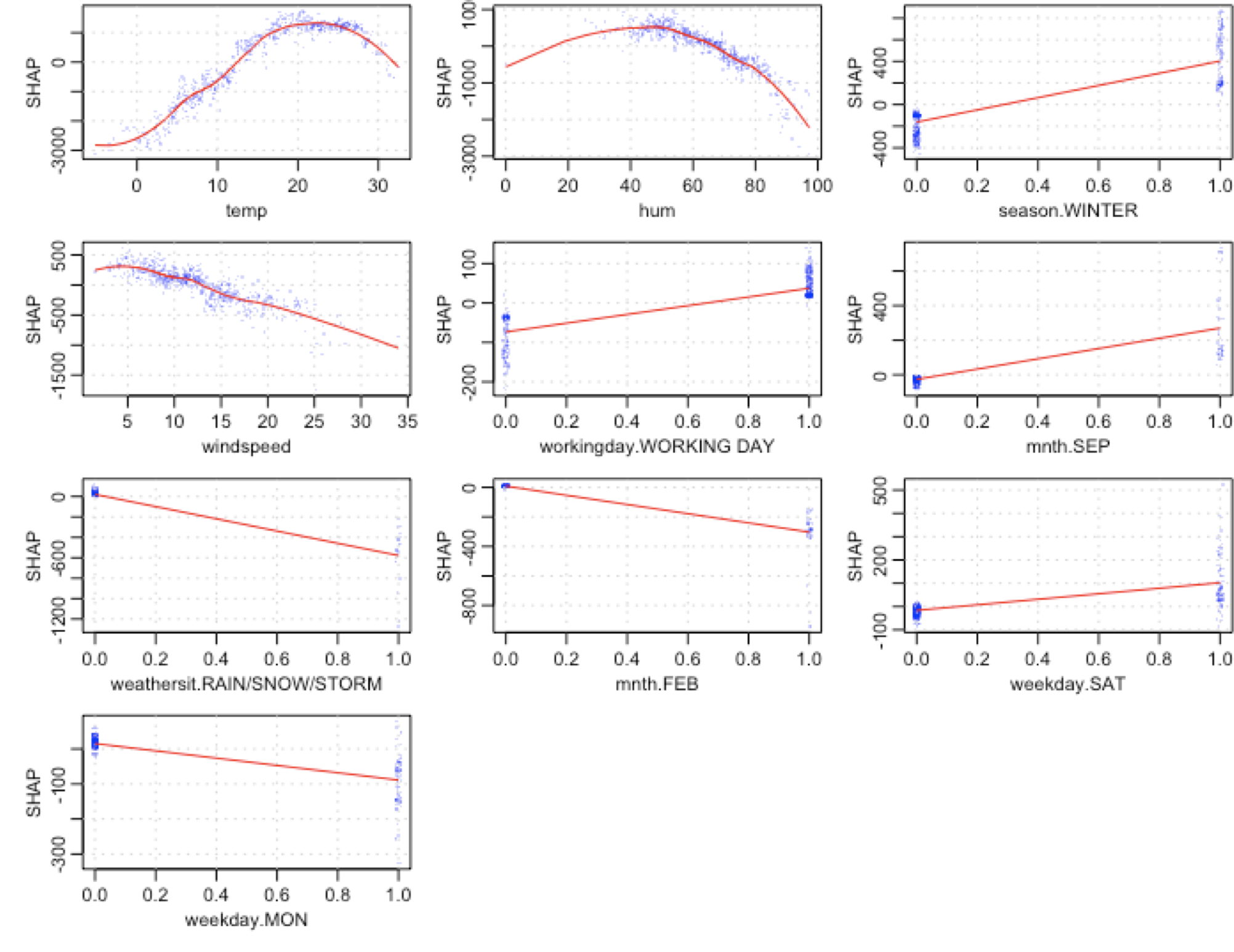

A gentle introduction to SHAP values in R

Feature Importance Analysis with SHAP I Learned at Spotify (with the Help of the Avengers), by Khouloud El Alami

The Shapley Value for ML Models. What is a Shapley value, and why is it…, by Divya Gopinath

Introduction to SHAP Values and their Application in Machine Learning, by Reza Bagheri