DeepSpeed: Accelerating large-scale model inference and training via system optimizations and compression - Microsoft Research

Last month, the DeepSpeed Team announced ZeRO-Infinity, a step forward in training models with tens of trillions of parameters. In addition to creating optimizations for scale, our team strives to introduce features that also improve speed, cost, and usability. As the DeepSpeed optimization library evolves, we are listening to the growing DeepSpeed community to learn […]

DeepSpeed: Extreme-scale model training for everyone - Microsoft Research

SW/HW Co-optimization Strategy for LLMs — Part 2 (Software), by Liz Li

Comparison of test and training time of benchmark network

Announcing the DeepSpeed4Science Initiative: Enabling large-scale scientific discovery through sophisticated AI system technologies

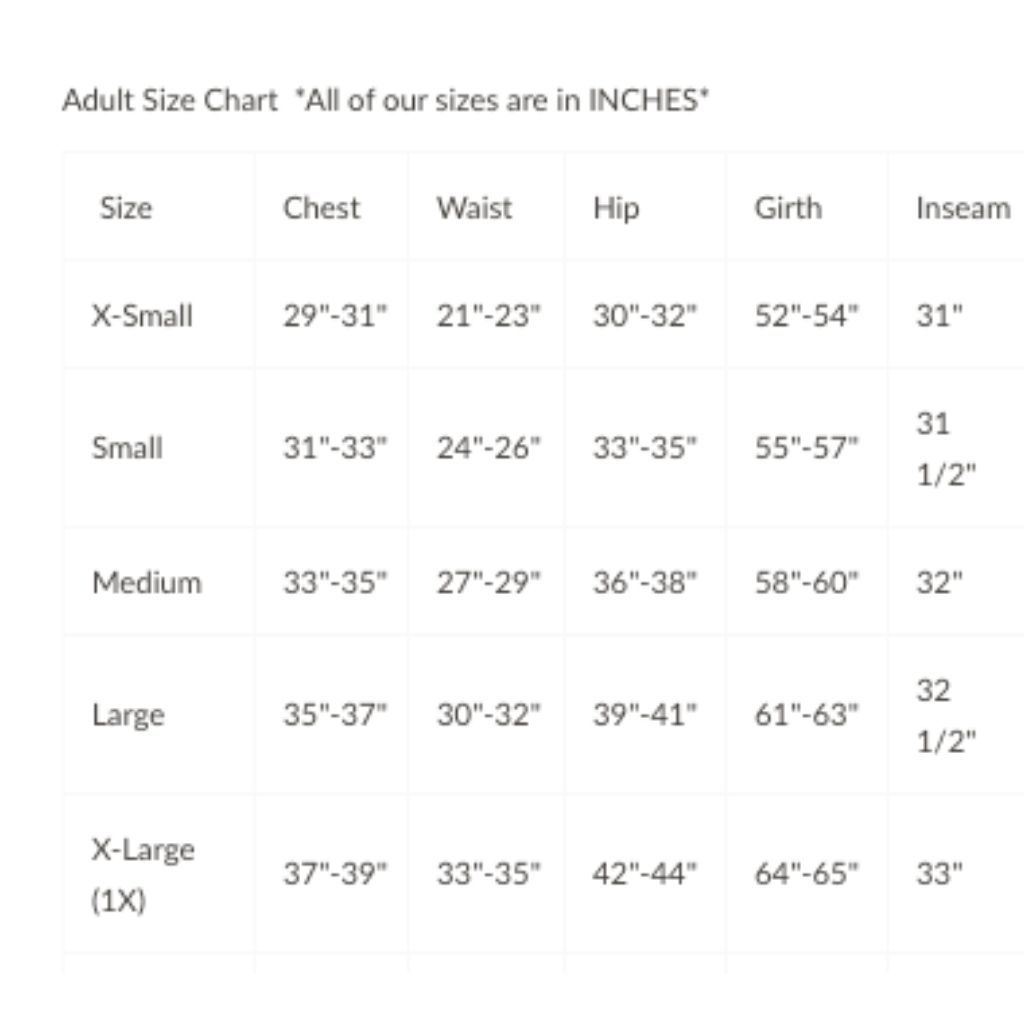

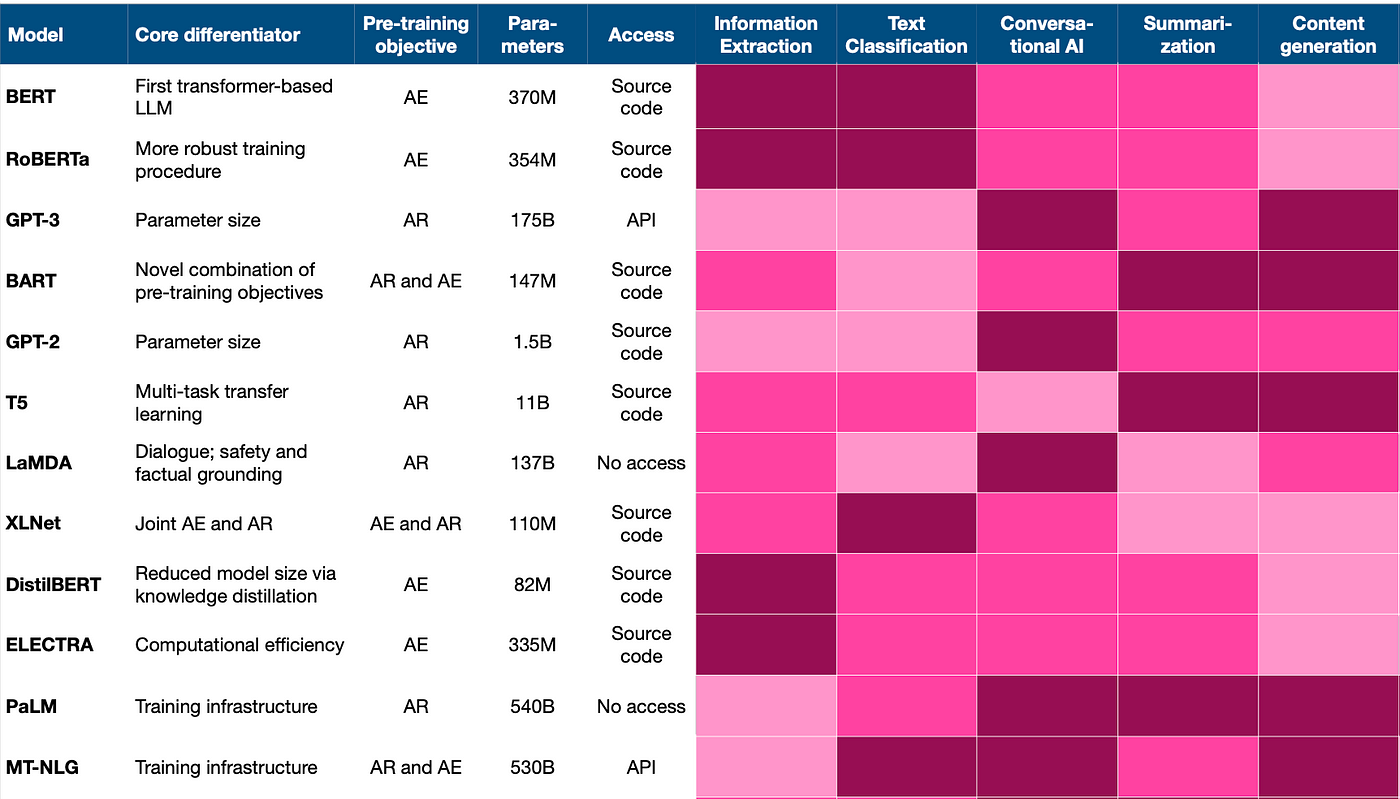

Pre-Trained Language Models and Their Applications - ScienceDirect

Kwai, Kuaishou & ETH Zürich Propose PERSIA, a Distributed Training System That Supports Deep Learning-Based Recommenders of up to 100 Trillion Parameters

9 libraries for parallel & distributed training/inference of deep learning models, by ML Blogger

DeepSpeed: Advancing MoE inference and training to power next-generation AI scale - Microsoft Research

Parallel intelligent computing: development and challenges