DeepSpeed: Accelerating large-scale model inference and training

Samyam Rajbhandari (@samyamrb) / X

SW/HW Co-optimization Strategy for LLMs — Part 2 (Software), by Liz Li

Deep Speed, PDF, Computer Architecture

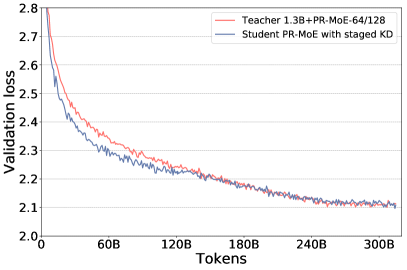

DeepSpeed-MoE: Advancing Mixture-of-Experts Inference and Training

[2201.05596] DeepSpeed-MoE: Advancing Mixture-of-Experts Inference and Training to Power Next-Generation AI Scale

Training your own ChatGPT-like model

GitHub - microsoft/DeepSpeed: DeepSpeed is a deep learning optimization library that makes distributed training and inference easy, efficient, and effective.

Large Model Training and Inference with DeepSpeed // Samyam

How Mantium achieves low-latency GPT-J inference with DeepSpeed on
