DistributedDataParallel non-floating point dtype parameter with requires_grad=False · Issue #32018 · pytorch/pytorch · GitHub

🐛 Bug Using DistributedDataParallel on a model that has at least one non-floating point dtype parameter with requires_grad=False with a WORLD_SIZE <= nGPUs/2 on the machine results in an error "Only Tensors of floating point dtype can re

Cannot update part of the parameters in DistributedDataParallel

PyTorch 1.8 : Notes : Distributed Data Parallel (Processing) – Transformers

Rethinking PyTorch Fully Sharded Data Parallel (FSDP) from First

Inplace error if DistributedDataParallel module that contains a

[Error] [Pytorch] TypeError: '<' not supported between instances of

Issue for DataParallel · Issue #8637 · pytorch/pytorch · GitHub

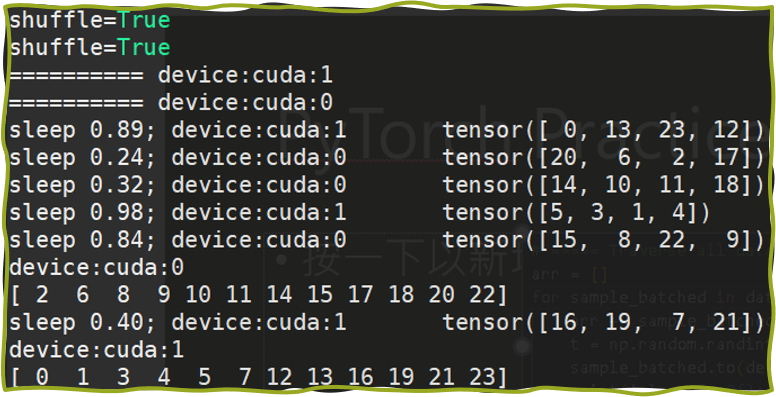

Distributed Data Parallel and Its Pytorch Example

Achieving FP32 Accuracy for INT8 Inference Using Quantization

RuntimeError: Only Tensors of floating point and complex dtype can